Automated Classification of TA Comments on Student Writing

Overview

The STEM Writing Project is using classroom research and data science to better understand how students develop technical writing skills in large-enrollment biology courses. Our current NSF-sponsored grant asks if student development as writers can be accelerated using a mix of scripted active instruction, automated support of writing in progress, and holistic feedback.

One specific question is whether the structure and main focus of instructors’ comments affect the rate of students’ writing development over time. To answer this, we must extract instructor comments from student reports, categorize the comments according to their subject and structure, then correlate the types of comments instructors make with student performance.

Classifying TA comments has been informative, but doing it by hand is impractical. First, 10,000-12,000 comments must be scored EACH semester. Second, there is a significant risk of “coding drift” over time, which reduces accuracy.

My project for the Faculty Learning Community for R was to create an automated comment classifier that can sort TA comments from student reports into the same categories that we use for hand-coded data. This automated classifier will be used within the larger NSF-funded research project to:

- Improve both speed and consistency when classifying TA comments across multiple semesters.

- Monitor how the key concepts and points that TAs stress in comments on student writing change over time.

Outline of Work Plan

The plan is to:

- Compile an anonymized testing dataset of hand-coded TA comments from reports.

- Import TA comments and relevant metadata from student reports into R as a “tidy” dataframe.

- Explore comment data structure, n-gram frequencies, etc., and identify potential elements for feature engineering.

- Write a supervised text classification workflow that uses Naive Bayes to assign TA comments to our pre-defined categories.

- Test permutations of analysis parameters to optimize the classifier and establish baseline accuracy.

- Apply the optimized NB classifier to the original FULL comment dataset, identify which comments are being classified incorrectly, and look for potential patterns.
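
The supervised classification step in the plan above can be illustrated with a minimal sketch. It is written in plain Python rather than R and is not the project's actual workflow; it only shows the general shape of a multinomial Naive Bayes text classifier with add-one smoothing. The category names (`technical`, `writing`, `logic`) and the six training comments are invented for illustration and do not come from the project's coding scheme or data.

```python
import math
import re
from collections import Counter, defaultdict


def tokenize(text):
    """Lowercase a comment and split it into word tokens."""
    return re.findall(r"[a-z']+", text.lower())


class NaiveBayesClassifier:
    """Multinomial Naive Bayes with add-one (Laplace) smoothing."""

    def fit(self, comments, labels):
        self.class_counts = Counter(labels)      # comments per category
        self.word_counts = defaultdict(Counter)  # token counts per category
        self.vocab = set()
        for text, label in zip(comments, labels):
            tokens = tokenize(text)
            self.word_counts[label].update(tokens)
            self.vocab.update(tokens)
        self.total = len(labels)
        return self

    def log_scores(self, text):
        """Return log P(category) + sum of log P(token | category)."""
        # Tokens never seen in training are ignored.
        tokens = [t for t in tokenize(text) if t in self.vocab]
        scores = {}
        for label, n in self.class_counts.items():
            score = math.log(n / self.total)
            denom = sum(self.word_counts[label].values()) + len(self.vocab)
            for t in tokens:
                score += math.log((self.word_counts[label][t] + 1) / denom)
            scores[label] = score
        return scores

    def predict(self, text):
        scores = self.log_scores(text)
        return max(scores, key=scores.get)


# Invented toy data; the real project uses hand-coded TA comments.
train_comments = [
    "Check your units in this table",
    "Report the p value for this t test",
    "This sentence is unclear and too wordy",
    "Fix the grammar and spelling in this paragraph",
    "Your conclusion does not follow from the data",
    "Explain why the results support your hypothesis",
]
train_labels = ["technical", "technical", "writing", "writing", "logic", "logic"]

clf = NaiveBayesClassifier().fit(train_comments, train_labels)
print(clf.predict("Spelling and grammar errors in this sentence"))
print(clf.predict("Report the units for this value"))
```

Working in log space avoids numerical underflow when many small probabilities are multiplied, and add-one smoothing keeps a token that is absent from one category from driving that category's probability to zero.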

After completing the FLC project, my goals are to:

- Write a small set of rule-based or regex pattern-based searches, built on the features identified in Steps #3 and #6 above, that can identify comments that are more likely to be classified incorrectly.

- Combine the rule/pattern-based pre-screening process with the optimized Naive Bayes classifier to create a vertical Ensemble Classifier.

- Re-validate the Ensemble Classifier against a subset of ~2,000 independently hand-coded TA comments extracted from student lab reports from a different semester than the initial test dataset.

- Begin using the classifier to monitor TA comment patterns in the ongoing NSF-funded project.
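
One way the pre-screening and classification stages described above might be chained is sketched below in Python. Everything specific here is an assumption made for illustration: the three regex rules, the `needs_review` label, the choice to route flagged comments to manual review, and the `placeholder_classifier` function that stands in for the optimized Naive Bayes model. The actual rules would come from the error patterns found during the project.

```python
import re

# Hypothetical pre-screening rules: (rule name, compiled pattern).
# These are placeholders, not rules derived from the project's data.
PRESCREEN_RULES = [
    ("only_punctuation", re.compile(r"^\W*$")),          # "", "???"
    ("too_short", re.compile(r"^\W*\w+(\W+\w+)?\W*$")),  # one or two words
    ("question_only", re.compile(r"^[^.!]*\?\s*$")),     # a single question
]


def prescreen(comment):
    """Return the name of the first rule the comment matches, or None."""
    for name, pattern in PRESCREEN_RULES:
        if pattern.search(comment):
            return name
    return None


def ensemble_classify(comment, classifier):
    """Stage 1: regex pre-screen. Stage 2: statistical classifier.

    Assumption: comments flagged in stage 1 are set aside for manual
    review instead of being passed to the classifier.
    """
    rule = prescreen(comment)
    if rule is not None:
        return ("needs_review", rule)
    return (classifier(comment), "classifier")


def placeholder_classifier(comment):
    """Hypothetical stand-in for the trained Naive Bayes classifier."""
    return "writing" if "grammar" in comment.lower() else "technical"


for text in ["Good!", "Why did you choose this test?",
             "Fix the grammar in this paragraph."]:
    print(text, "->", ensemble_classify(text, placeholder_classifier))
```

Running the cheap pattern checks first means the statistical model only sees comments it has a reasonable chance of labeling correctly, and the returned rule name records why a comment was set aside.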

Looking further ahead, I hope to use the work presented here as a baseline for evaluating other potential text classification models that are described in the Background.

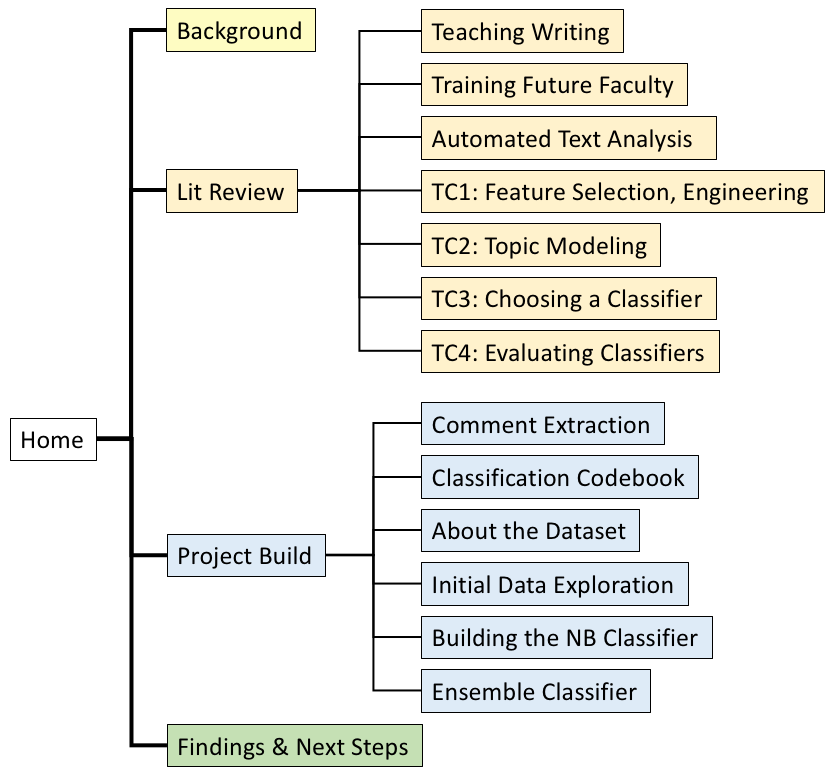

Project Site Map

Copyright © 2019 A. Daniel Johnson. All rights reserved.